Terrain and Fog Rendering

Created for the "Real-Time Rendering" course at the University of Koblenz

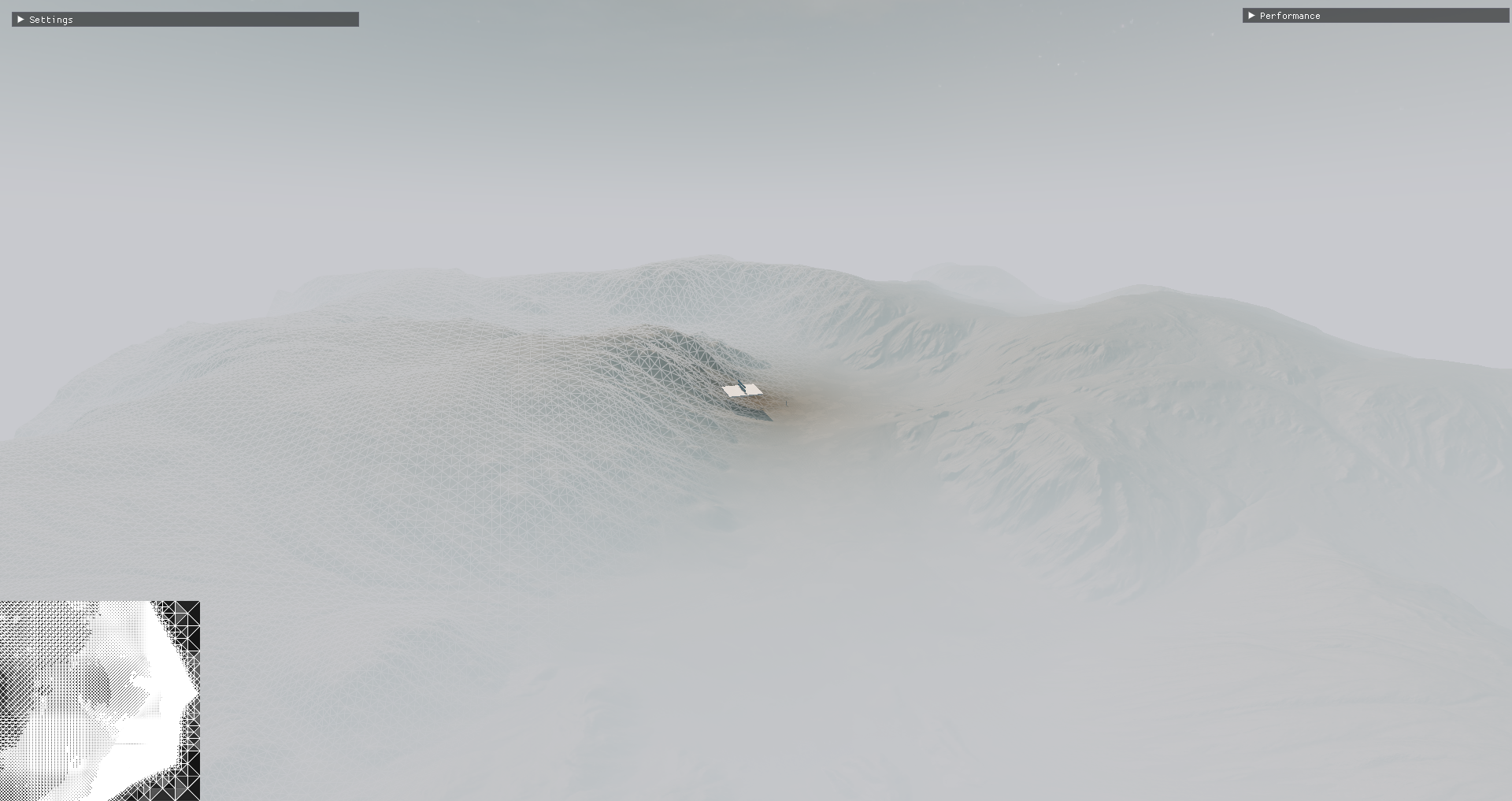

Terrain

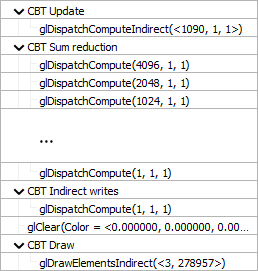

I was responsible for implementing the Concurrent Binary Tree data structure to manage/update the terrain mesh, as well as the material/shader setup for rendering it.

All of the logic for updating the terrain mesh is implemented in a series of compute shaders, following the Concurrent Binary Tree paper.

NOTE: The sum reduction pass is far from optimized!
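For context, a sum reduction over a binary heap can be sketched as one compute dispatch per tree level, going from the deepest interior level up to the root. This is a simplified illustration, not the code used in this project: it assumes one `uint` per heap node instead of a packed bitfield, and the names `CbtHeap`, `heap`, and `u_level` are hypothetical.

```glsl
#version 450
layout(local_size_x = 64) in;

// Assumption: one uint per heap node, root stored at index 1,
// children of node n stored at 2n and 2n + 1.
layout(std430, binding = 0) buffer CbtHeap { uint heap[]; };

// Assumption: depth of the level written by this dispatch (0 = root).
layout(location = 0) uniform uint u_level;

void main()
{
    uint nodesInLevel = 1u << u_level;
    uint i = gl_GlobalInvocationID.x;
    if (i >= nodesInLevel) return;

    // Heap index of the i-th node on this level.
    uint node = nodesInLevel + i;

    // Each interior node stores the sum of its two children.
    heap[node] = heap[2u * node] + heap[2u * node + 1u];
}
```

A pass like this needs a memory barrier between levels, since every level reads the results of the level below it.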

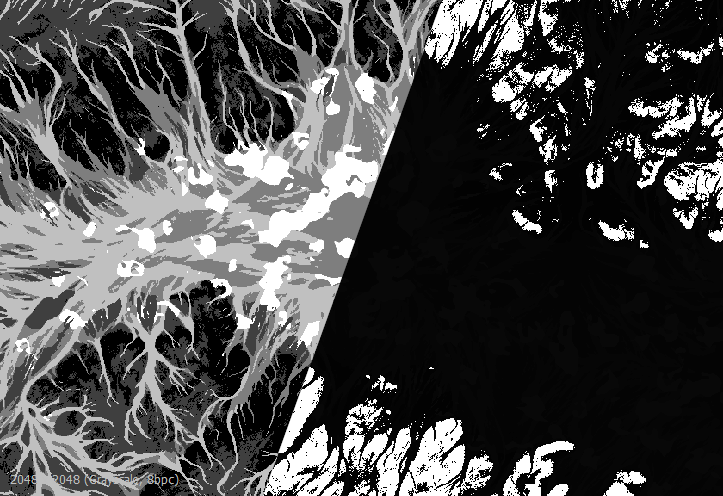

For the terrain shader I wanted to try an index-map-based approach inspired by a GDC presentation about the terrain in Ghost Recon Wildlands, where each texel in an unsigned integer texture holds an index into a material array. The most significant bit indicates whether the area represented by the texel is sloped enough to require biplanar mapping.
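Decoding such a texel could look roughly like the sketch below. It assumes an 8-bit unsigned integer format, which matches the `128u` and `0x7F` masks used later on this page. The names `u_indexMap`, `IndexMapEntry`, and `fetchIndexMapEntry` are made up for illustration.

```glsl
// Assumption: 8-bit unsigned integer index map (e.g. R8UI).
uniform usampler2D u_indexMap;

const uint BIPLANAR_FLAG = 0x80u; // most significant bit of the 8-bit texel
const uint MATERIAL_MASK = 0x7Fu; // lower 7 bits: index into the material array

struct IndexMapEntry
{
    uint materialIndex;
    bool needsBiplanar;
};

IndexMapEntry fetchIndexMapEntry(ivec2 texel)
{
    // Integer textures are read unfiltered; .r holds the raw value.
    uint raw = texelFetch(u_indexMap, texel, 0).r;

    IndexMapEntry entry;
    entry.materialIndex = raw & MATERIAL_MASK;
    entry.needsBiplanar = (raw & BIPLANAR_FLAG) != 0u;
    return entry;
}
```

With 7 bits left for the index, this layout addresses up to 128 materials.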

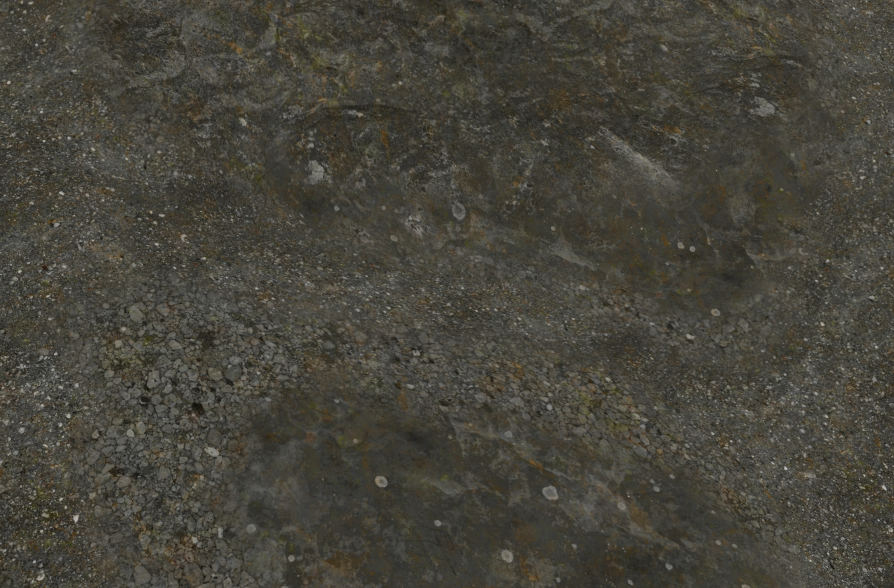

Sampling the material textures according to that index then results in:


And interpolating materials based on the nearest four indices:

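Integer textures cannot be filtered in hardware, so the blend has to be done manually. A minimal sketch of fetching the four neighbouring indices together with their bilinear weights follows, assuming the same hypothetical `u_indexMap` sampler. The function name and parameter layout are illustrative only.

```glsl
uniform usampler2D u_indexMap; // hypothetical name

// Fetches the four index-map texels surrounding uv and computes the
// bilinear weight of each one. The weights sum to 1.
void gatherIndicesAndWeights(vec2 uv, out uvec4 ids, out vec4 weights)
{
    ivec2 size = textureSize(u_indexMap, 0);

    // Shift by half a texel so that texel centers sit on integer coordinates.
    vec2 texelPos = uv * vec2(size) - 0.5;
    ivec2 base = ivec2(floor(texelPos));
    vec2 f = fract(texelPos);

    ivec2 lo = ivec2(0);
    ivec2 hi = size - 1;
    ids.x = texelFetch(u_indexMap, clamp(base,               lo, hi), 0).r;
    ids.y = texelFetch(u_indexMap, clamp(base + ivec2(1, 0), lo, hi), 0).r;
    ids.z = texelFetch(u_indexMap, clamp(base + ivec2(0, 1), lo, hi), 0).r;
    ids.w = texelFetch(u_indexMap, clamp(base + ivec2(1, 1), lo, hi), 0).r;

    weights = vec4((1.0 - f.x) * (1.0 - f.y),
                   f.x         * (1.0 - f.y),
                   (1.0 - f.x) * f.y,
                   f.x         * f.y);
}
```

The final material attributes are then the weighted sum of the attributes sampled for each of the four indices.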

Since there was no virtual texturing solution in place, and the results of this blend couldn't be cached at a high enough resolution without one, the full logic had to run for every terrain pixel every frame. That is obviously quite expensive, so some architectural changes were needed. Simply switching from forward to deferred rendering wasn't enough: shading was less of a bottleneck than overdraw, which resulted in lots of wasted material logic. Because of that I decided to implement a “UV-buffer”-like solution that stores the non-displaced vertex positions (since those are what the textures are mapped with) as well as their quad derivatives (packed into one additional texture).

    vec3 dPdx = dFdx(worldPosNoDisplacement);
    vec3 dPdy = dFdy(worldPosNoDisplacement);
    // Each uint holds one component of both derivatives as two 16-bit floats
    uint packeddX = packHalf2x16(vec2(dPdx.x, dPdy.x));
    uint packeddY = packHalf2x16(vec2(dPdx.y, dPdy.y));
    uint packeddZ = packHalf2x16(vec2(dPdx.z, dPdy.z));

That guarantees that not just the shading but also the material texture fetches only happen for visible fragments.
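On the reading side, the stored derivatives can be unpacked and passed to `textureGrad`, so that mip selection still works in a pass where implicit derivatives of the position are not meaningful. The sketch below assumes the three packed values arrive as a `uvec3` in the same order as above. The helper names and the `tiling` parameter are hypothetical.

```glsl
// Reverses the packing: each uint holds (dPdx.c, dPdy.c) for one component c.
void unpackPositionDerivatives(uvec3 packedDerivs, out vec3 dPdx, out vec3 dPdy)
{
    vec2 x = unpackHalf2x16(packedDerivs.x);
    vec2 y = unpackHalf2x16(packedDerivs.y);
    vec2 z = unpackHalf2x16(packedDerivs.z);

    dPdx = vec3(x.x, y.x, z.x);
    dPdy = vec3(x.y, y.y, z.y);
}

// Top-down planar lookup using explicit gradients instead of implicit ones.
// Assumption: Y is up, so the XZ plane is used for the flat projection.
vec4 sampleTopDown(sampler2D tex, vec3 worldPos, vec3 dPdx, vec3 dPdy, float tiling)
{
    return textureGrad(tex,
                       worldPos.xz * tiling,
                       dPdx.xz * tiling,
                       dPdy.xz * tiling);
}
```

Half precision is usually sufficient here because the derivatives only drive mip selection and anisotropic filtering.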

I also tried to be smart and sort the pixels into groups depending on how many texture fetches they need, in an attempt to reduce divergence inside the workgroups. That turned out to be over-engineering: the lack of high-frequency detail in the index map meant that most pixels only needed to go through one of the two fast passes anyway, so divergence wasn't really a problem to begin with:

    MaterialAttributes attributes;
    // Check if the pixel requires just a single material lookup
    if (materialIDs[0] == materialIDs[1] && materialIDs[1] == materialIDs[2] && materialIDs[2] == materialIDs[3])
    {
        // Most significant bit set: sloped enough to require biplanar mapping
        if ((materialIDs[0] & 128u) == 128u)
        {
            attributes = biplanarSampleOfMaterialAttributesFromID(materialIDs[0] & 0x7F, ...);
        }
        else
        {
            attributes = flatSampleOfMaterialAttributesFromID(materialIDs[0] & 0x7F, ...);
        }
    }

Nevertheless, the UV-buffer approach was a massive performance win, especially with small triangle sizes and at view angles where the binary tree structure resulted in mostly back-to-front sorted triangles.

Fog

The main feature is a multiple-scattering approximation on top of an analytic exponential height fog.
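For reference, the analytic part of an exponential height fog is commonly computed as in the sketch below. This is the textbook formulation, not necessarily the exact one used here. It assumes Y is up and a density of `u_fogDensity * exp(-u_fogFalloff * height)`; both uniform names are hypothetical.

```glsl
// Assumed fog parameters.
uniform float u_fogDensity; // density at height 0
uniform float u_fogFalloff; // exponential falloff per unit of height

// Integrates density(h) = u_fogDensity * exp(-u_fogFalloff * h)
// along the straight line from camPos to worldPos.
float heightFogOpticalDepth(vec3 camPos, vec3 worldPos)
{
    vec3 ray = worldPos - camPos;
    float dist = length(ray);

    float densityAtCamera = u_fogDensity * exp(-u_fogFalloff * camPos.y);

    // t is the falloff times the total height change along the ray.
    float t = u_fogFalloff * ray.y;

    // (1 - exp(-t)) / t tends to 1 as t tends to 0, so a series
    // expansion is used for nearly horizontal rays.
    float integral = abs(t) > 1e-4 ? (1.0 - exp(-t)) / t : 1.0 - 0.5 * t;

    return densityAtCamera * dist * integral;
}

// Fraction of light that survives the trip through the fog.
float heightFogTransmittance(vec3 camPos, vec3 worldPos)
{
    return exp(-heightFogOpticalDepth(camPos, worldPos));
}
```

The amount of fog along the view vector is then `1.0 - heightFogTransmittance(...)`.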

It works by storing any light that is “lost” due to out-scattering in an additional render target, which is then blurred in the same way as the bloom in Call of Duty: Advanced Warfare and finally added back to the “basic” fog, weighted by the amount of fog along the view vector.
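One possible reading of that description, written out as two small functions, is sketched below. The exact weighting used in the project is not given here, so treat it as an assumption: it takes the out-scattered light to be the part of the scene color removed by the fog, and it uses `1 - transmittance` as the amount of fog. All names are illustrative.

```glsl
// Light removed from the view ray by the fog. This is what would be
// written to the additional render target before blurring.
vec3 outScatteredLight(vec3 sceneColor, float transmittance)
{
    return sceneColor * (1.0 - transmittance);
}

// Combines the analytic fog with the blurred out-scattered light.
// blurredScatter is the result of the bloom-style blur chain.
vec3 compositeFog(vec3 sceneColor, vec3 fogColor, vec3 blurredScatter, float transmittance)
{
    float fogAmount = 1.0 - transmittance;

    // "Basic" fog: attenuated scene plus a constant fog color.
    vec3 basicFog = sceneColor * transmittance + fogColor * fogAmount;

    // Add the blurred light back, weighted by the amount of fog.
    return basicFog + blurredScatter * fogAmount;
}
```

Weighting by the fog amount keeps unfogged pixels untouched, since their `fogAmount` is zero.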

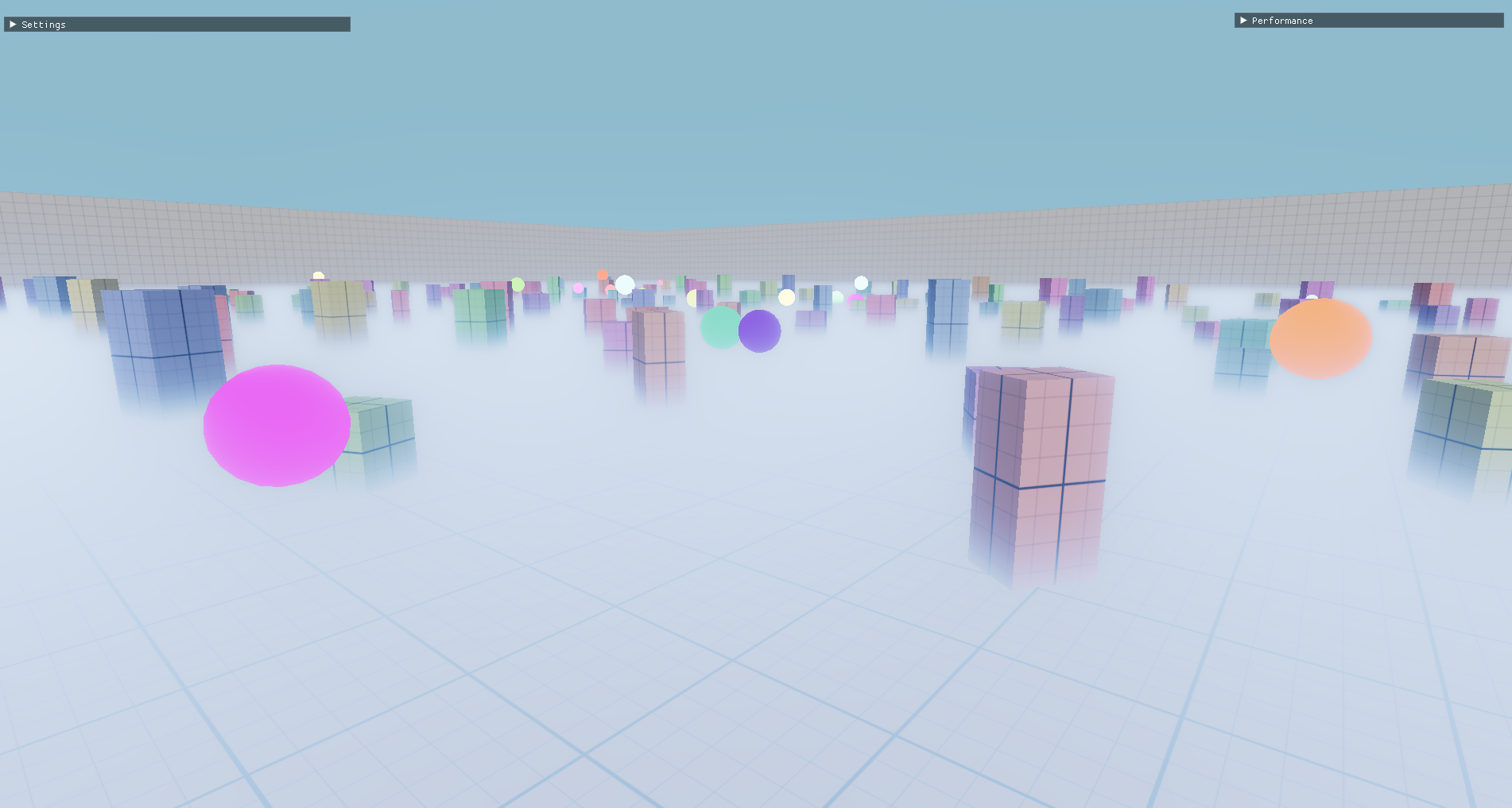

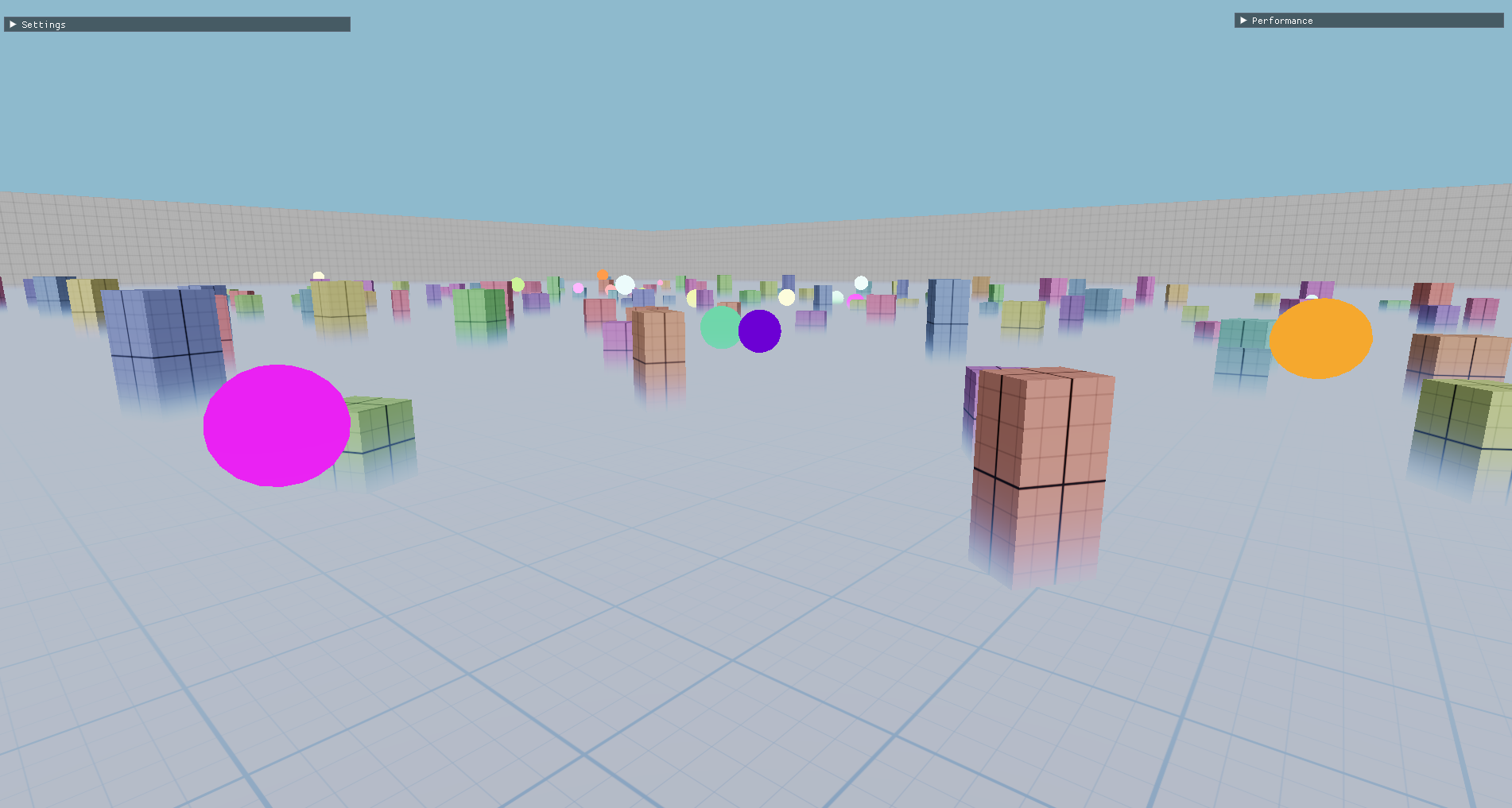

The result of this can best be seen in a test environment with more depth variation:

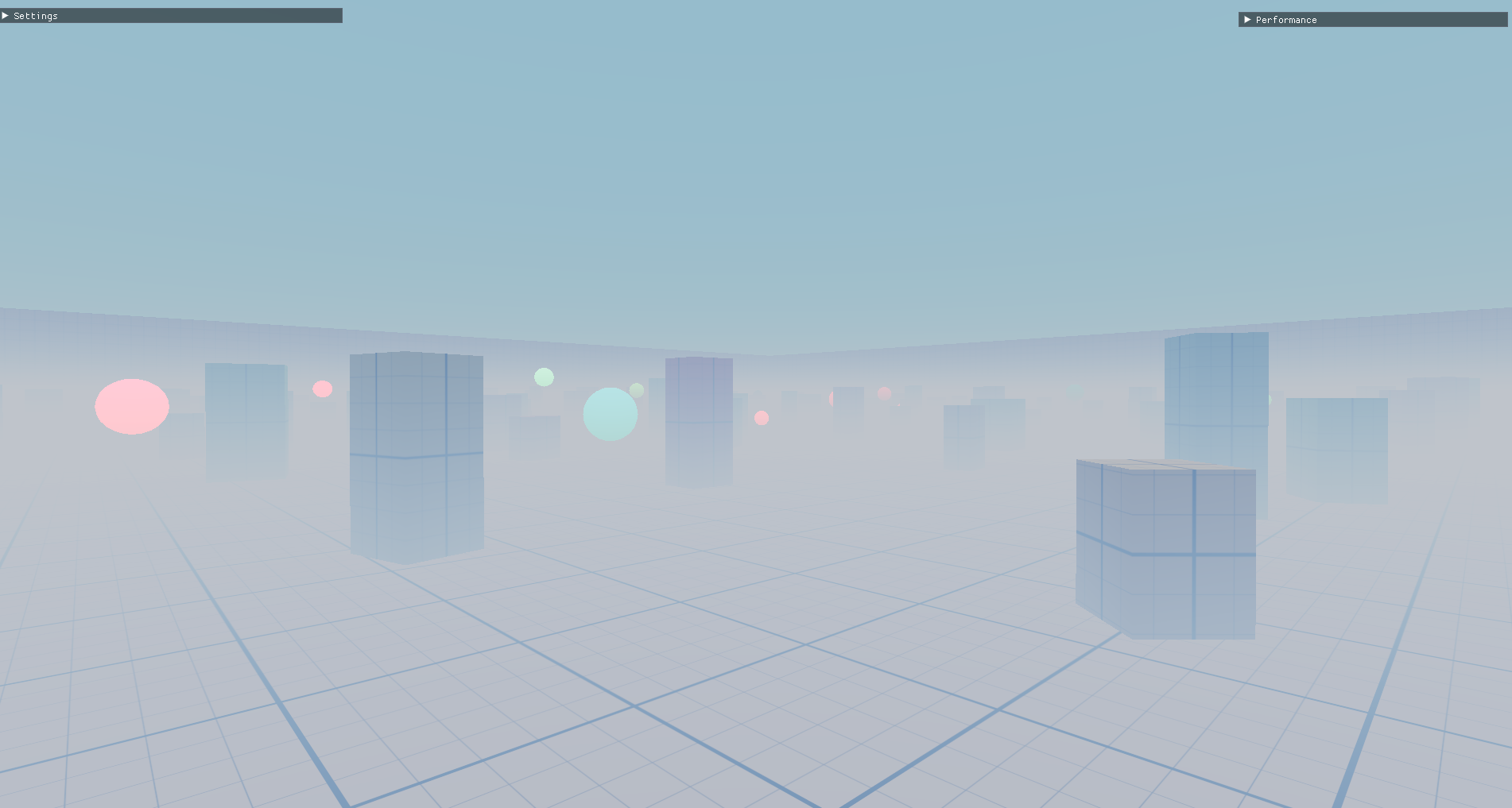

without in-scattering:

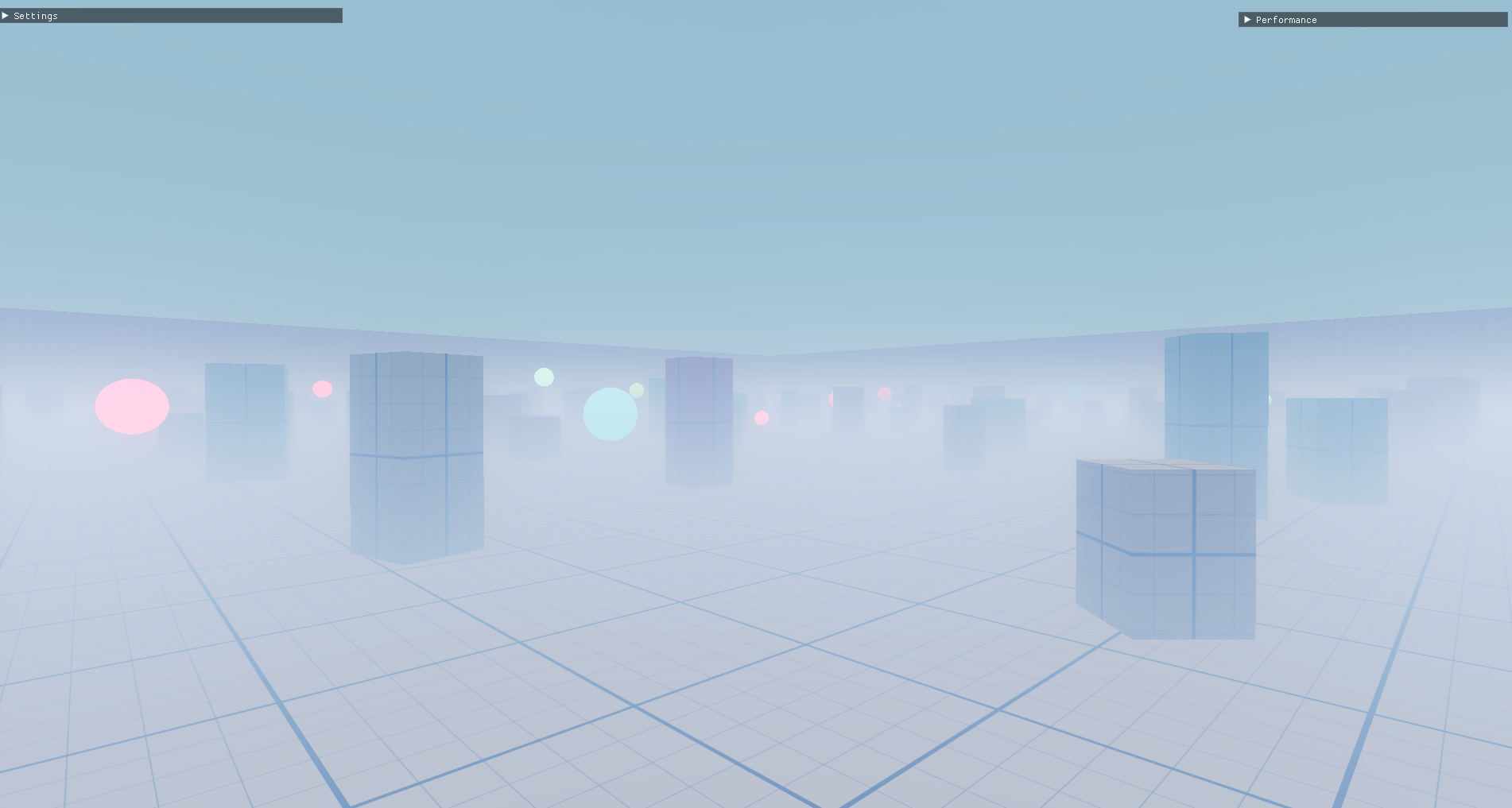

with in-scattering:


without in-scattering:


with in-scattering:
